Excerpted from 1995: The Year the Future Began by W. Joseph Campbell (footnotes omitted). Copyright © 2015 by the author and reprinted by permission of University of California Press. All rights reserved. No part of this excerpt may be reprinted, reproduced, posted on another website or distributed by any means without the written permission of the publisher.

Nineteen ninety-five was the inaugural year of the twenty-first century, a clear starting point for contemporary life.

It was “the year of the Internet,” when the World Wide Web entered mainstream consciousness, when now-familiar mainstays of the digital world such as Amazon.com, eBay, Craigslist, and Match.com established their presence online. It was, proclaimed an exuberant newspaper columnist at the time, “the year the Web started changing lives.”

Nineteen ninety-five was marked by a deepening national preoccupation with terrorism. The massive bombing in Oklahoma City killed 168 people, the deadliest act of domestic terror in U.S. history. Within weeks of the bombing, a portion of Pennsylvania Avenue—the “Main Street” of America—was closed to vehicular traffic near the White House, signaling the rise of security-related restrictions intended to thwart the terrorist threat—restrictions that have since become more common, more intrusive, more stringent, and perhaps even more accepted.

Nineteen ninety-five was the year of the sensational and absorbing “Trial of the Century,” when O.J. Simpson, the popular former football star, answered to charges that he had slashed to death his former wife and her friend. The trial stretched across much of the year, not unlike an indelible stain, ending in Simpson’s acquittal but not in the redemption of his public persona. Ironically, the trial’s most tedious stretches produced its most lasting contribution: the Simpson case introduced into popular consciousness the decisive potential of forensic DNA evidence in criminal investigations and legal proceedings.

Nineteen ninety-five brought an unmistakable though belated assertion of muscular U.S. diplomacy, ending the vicious war in Bosnia, Europe’s deadliest and most appalling conflict in forty years. Crafting a fragile peace in the Balkans gave rise to a sense of American hubris that was tragically misapplied not many years later, in the U.S.-led invasion of Iraq.

The year brought the first of several furtive sexual encounters between President Bill Clinton and a nominal White House intern twenty-seven years his junior, Monica Lewinsky. Their intermittent dalliance began in 1995 and eventually erupted in a lurid sex scandal that rocked the U.S. government and brought about the first-ever impeachment of an elected American president.

The emergence of the Internet, the deadliest act of homegrown terrorism on U.S. soil, the “Trial of the Century,” the muscular diplomacy of the United States, and the origins of a sex scandal at the highest levels of American government were significant events in 1995, and each is explored as a chapter of this book. They were profound in their respective ways, and, taken together, they define a watershed year at the cusp of the millennium. Nineteen ninety-five in many ways marked the close of one century and the start of another.

With the critical distance afforded by the passing of twenty years, the exceptionality of 1995 emerges quite clearly. That exceptionality was not altogether obvious at the close of 1995, at least not to commentators in the media who engaged in no small amount of hand-wringing about the year. A writer for the Boston Herald, for example, suggested that history books “may well remember 1995 as one sorry year.” It was, after all, the year when Israel’s prime minister, Yitzhak Rabin, was assassinated at a peace rally in Tel Aviv, in the Kings of Israel Square. It was the year when a troubled woman named Susan Smith was tried and convicted on charges of drowning her two little boys in 1994, after strapping them into their seats and rolling her car into a lake in South Carolina. Smith at first blamed an unknown black man for having hijacked the car and made off with the boys inside. She recanted several days later and confessed that she had killed her sons.

In a summing up at year’s end, the Philadelphia Inquirer recalled the massacre at Srebrenica in July 1995, when Bosnian Serb forces systematically killed 8,000 Muslim men and boys in what the United Nations had designated a safe area. “So base were the emotions, so dark the actions, that at times you had to wonder which century we were in,” the newspaper declared. “Was it 1995 or 1395? A tribal war with overtones of genocide? We bring you Bosnia and the mass graves at Srebrenica… At least there’s one difference between now and 600 years ago. The killing’s become more efficient.”

But not all leave-taking assessments of 1995 were so grim. Reason magazine observed that, at the end of 1995, Americans were living the good old days; they “never had it so good.” Living standards, Reason argued, were higher than ever. Americans were healthier than ever. They had more leisure time than ever. “Americans will swear life is more hectic than it used to be, that there’s not enough time anymore. What’s crowding their lives, though, isn’t necessarily more work or more chores. It is the relentless chasing after the myriad leisure opportunities of a society that has more free time and more money to spend.”

It is striking how a sense of the improbable so often flavored the year and characterized its watershed moments. Oklahoma City was an utterly improbable setting for an attack of domestic terrorism of unprecedented dimension. Dayton, Ohio, was an improbable venue for weeks of multiparty negotiations that concluded by ending the faraway war in Bosnia. The private study and secluded hallway off the Oval Office at the White House were the improbable hiding places for Clinton’s dalliance with the twenty-two-year-old Lewinsky.

The improbable was a constant of the year. In February, a twenty-eight-year-old, Singapore-based futures trader named Nick Leeson brought down Barings PLC, Britain’s oldest merchant bank, after a series of ill-considered and mostly unsupervised investments staked to the price fluctuations of the Japanese stock market. Leeson’s bets went spectacularly wrong and cost Barings $1.38 billion, wiping out an aristocratic investment house that was 232 years old. The rogue trader spent four years in prison in Singapore and wrote a self-absorbed book in which he gloated that auditors “never dared ask me any basic questions, since they were afraid of looking stupid about not understanding futures and options.”

The most improbable prank of the year came in late October when Pierre Brassard, a twenty-nine-year-old radio show host in Montreal, impersonated Canada’s prime minister, Jean Chrétien, and got through by telephone to Elizabeth, the queen of England. Speaking in French and English, they discussed the absurd topic of the monarch’s plans for Halloween as well as the separatist referendum at the end of the month in Quebec, Canada’s French-speaking province. Could her majesty, Brassard asked, make a televised statement urging Quebecers to vote against the referendum? “We deeply believe that should your majesty have the kindness to make a public intervention,” said Brassard, “we think that your word could give back to the citizens of Quebec the pride of being members of a united country.” Replied the queen, after briefly consulting an adviser: “Do you think you could give me a text of what you would like me to say? … I will probably be able to do something for you.” Their conversation was broadcast live on CKOI-FM and went on for seven minutes. Only after it ended did Buckingham Palace realize the queen had been duped.

Nineteen ninety-five was a memorable time for the U.S. space program. NASA that year launched its 100th human mission, sending a crew of five Americans and two Russians into Earth orbit on June 27. They traveled aboard the space shuttle Atlantis, which docked two days later with the Russian space station, Mir, forming what at the time was “the biggest craft ever assembled in space.” When Atlantis returned to Earth on July 7, it brought home from Mir Dr. Norman E. Thagard, an astronaut-physician who had logged 115 days in space, then an American space-endurance record. The year’s most improbable moment in space flight had come about five weeks earlier, on a launch pad at Cape Canaveral. Woodpeckers in mating season punched no fewer than six dozen holes in the insulation protecting the external fuel tank of the shuttle Discovery. As the New York Times observed, the $2 billion spacecraft, “built to withstand the rigors of orbital flight, from blastoff to fiery re-entry,” was driven back to its hangar “by a flock of birds with mating on their minds.” Discovery’s flight was delayed by more than a month.

No celebrity sex scandal that year stirred as much comment and speculation as Hugh Grant’s improbable assignation in Hollywood with the pseudonymous Divine Brown, a twenty-three-year-old prostitute. The floppy-haired British actor, star of the soon-to-be-released motion picture Nine Months, had been dating one of the world’s most attractive models, Elizabeth Hurley, for eight years. On June 27 around 1:30 a.m., Grant was arrested in the streetwalker’s company in his white BMW. “Vice officers observed a prostitute go up to Mr. Grant’s window of his vehicle,” the Los Angeles police reported, and “they observed them have a conversation, then the known prostitute got into Mr. Grant’s vehicle and they drove a short distance and they were later observed to be engaged in an act of lewd conduct.” Grant was chastened, but hardly shamed into seclusion. He went public with his contrition, saying on Larry King’s interview program on CNN that his conduct had been “disloyal and shabby and goatish.” When her agent told her the news about Grant’s dalliance, Hurley said she “felt like I’d been shot.” Their relationship survived, for a few years. They split up in 2000.

“Firsts,” alone, do not make a watershed year. But they can be contributing factors, and 1995 was distinguished by several notable firsts. For the first time, the Dow Jones Industrial Average broke the barrier of 5,000 points. The Dow set sixty-nine record-high closings during the year and, overall, was up by 33 percent. It was a remarkable run, the Dow’s best performance in twenty years. And it had been quite unforeseen by columnists and analysts at the outset of the year. John Crudele, for example, wrote in the San Francisco Chronicle that 1995 “could be the worst year for the stock market in a long time” and quoted an analyst as saying the Dow could plunge to 3,000 or 3,200 points. At year’s end, the Dow stood at 5,117.12.

From Toy Story to the Million Man March

The first feature-length computer-animated film, Disney’s imaginative Toy Story, was released at Thanksgiving in 1995—not long after the prominent screenwriter William Goldman had pronounced the year’s first ten months the worst period for movies since sound. Toy Story told of a child’s toys come to life and won approving reviews. The New York Times said Toy Story was “a work of incredible cleverness in the best two-tiered Disney tradition. Children will enjoy a new take on the irresistible idea of toys coming to life. Adults will marvel at a witty script and utterly brilliant anthropomorphism. And maybe no one will even mind what is bound to be a mind-boggling marketing blitz.”

The year brought at long last the first, unequivocal proof that planets orbit Sun-like stars beyond Earth’s solar system. Extra-solar planets—or exoplanets—had long been theorized, and confirmation that such worlds exist represented an essential if tentative step in the long-odds search for extra-solar intelligent life. The first confirmed exoplanet was a blasted, gaseous world far larger than Jupiter that needs only 4.2 Earth days to orbit its host star in Pegasus, the constellation of the winged horse. It is an inhospitable, almost unimaginable world. The planet’s dayside—the side always facing the host star—has been estimated to be 400 times brighter than desert dunes on Earth on a midsummer’s day. Its nightside probably glows red. The exoplanet is more than fifty light years from Earth and was inelegantly christened “51 Pegasi b.” It was detected by two Swiss astronomers: Michel Mayor of the University of Geneva, and Didier Queloz, a twenty-eight-year-old doctoral student.

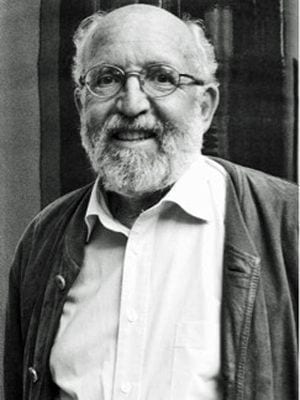

FIGURE 1. In October 1995, Swiss astronomer Michel Mayor announced the discovery of a planet orbiting a Sun-like star far beyond the Earth’s solar system. Identifying the first “exoplanet” was a tentative step in the long-odds search for intelligent life elsewhere in the universe. [Photo credit: Ann-Marie C. Regan]

Mayor announced the discovery on October 6 at the Ninth Cambridge Workshop on Cool Stars, Stellar Systems, and the Sun, which was meeting in Florence, Italy. About 300 conferencegoers were in attendance as the cordial and low-key Mayor told of finding 51 Pegasi b. He and Queloz had used a technique called “radial velocity,” in which a spectrograph measured slight, gravity-induced wobbling of the host star. His remarks were greeted by polite applause, and by no small measure of skepticism. The discovery of exoplanets had been reported many times since at least the nineteenth century. All such reports had been proved wrong. What’s more, a huge planet orbiting so near to its host posed an unambiguous challenge to the then-dominant theory of planetary formation. A giant planet, it was thought, could not long survive the extraordinarily high temperatures and other effects of being so close to its star. “Most people were skeptical,” Queloz recalled. “The expectation was to find giant planets in long period orbit [that took years, as] in our solar system—we had challenged that paradigm.” Soon enough, however, other astronomers verified the discovery of 51 Pegasi b.

Three months before the conference in Florence, Mayor and Queloz had confirmed the discovery to their satisfaction, in observations at the Observatoire de Haute Provence in France. Doing so, Mayor recalled, was akin to “a spiritual moment.” He, Queloz, and their families celebrated by opening a bottle of Clairette de Die, a sparkling white wine from the Rhone Valley. Since then, the search for exoplanets has become a central imperative in what has been called “a new age of astronomy” that could rival “that of the 17th century, when Galileo first turned his telescope to the heavens.” Animating the quest is the belief that the universe may be home to many Earth-like exoplanets orbiting host stars at distances that would allow temperate conditions, liquid water, and perhaps even the emergence of intelligent life. More than 1,700 exoplanets have been confirmed since the discovery of 51 Pegasi b, and the planet-hunting Kepler space telescope, between 2009 and its breakdown in 2013, identified more than 3,500 candidate exoplanets.

One of the year’s pleasantly subversive books, a thin volume of short essays titled Endangered Pleasures: In Defense of Naps, Bacon, Martinis, Profanity, and Other Indulgences, posited that simple earthly pleasures were slipping into disfavor and even disrepute. The author, Barbara Holland, wrote that, in small and subtle ways, “joy has been leaking out of our lives. Almost without a struggle, we have let the New Puritans take over, spreading a layer of foreboding across the land until even ignorant small children rarely laugh anymore. Pain has become nobler than pleasure; work, however foolish or futile, nobler than play; and denying ourselves even the most harmless delights marks the suitably somber outlook on life.”

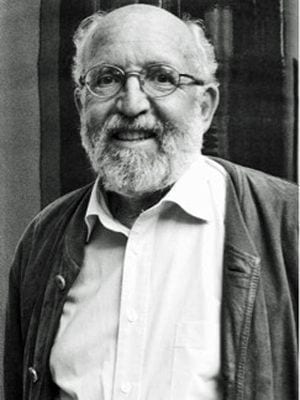

FIGURE 2. Barbara Holland’s Endangered Pleasures was a delightfully subversive book of 1995. In it, she argued that “joy has been leaking out of our lives. Almost without a struggle, we have let the New Puritans take over.” [Photo credit: Mel Crown]

Holland’s was an impressionistic thesis, and somewhat overstated. But undeniably, there was something to be said for celebrating what made the scolds and killjoys so sour. “Now in the nineties,” she wrote, “we’re left to wring joy from the absence of joy, from denial, from counting grams of fat, jogging, drinking only bottled water and eating only broccoli. The rest of the time we work.” Holland’s wry and engaging musings on topics such as napping, profanity, wood fires, and the Sunday newspaper drew the admiring attention of Russell Baker, a humor columnist for the New York Times and one of the most wry and engaging observers of the national scene.

Baker wrote appreciatively of Holland’s book, saying she “makes her case with a light touch and a refusal to speak solemnly of anyone, even those grimmest of gloom-spreaders, the smoke police” eager to crack down on cigarettes. “Appropriately for an author promoting pleasure in joyless times, Ms. Holland makes her case in a mere 175 pages,” Baker observed. “Books that say their say without droning on at encyclopedia length are also one of our endangered pleasures.” Baker himself achieved a bit of lasting distinction in 1995. His commencement speech that year at Connecticut College in New London—titled “10 Ways to Avoid Mucking Up the World Any Worse Than It Already Is”—sometimes is ranked among the best of its genre. Baker offered such sardonic guidance as: “The best advice I can give anybody about going out into the world is this: Don’t do it. I have been out there. It is a mess.” More seriously, and grimly, he touched on what he considered to be the angry national mood in 1995:

I have never seen a time when there were so many Americans so angry or so mean-spirited or so sour about the country as there are today. Anger has become the national habit. You see it on the sullen faces of fashion models scowling out of magazines. It pours out of the radio. Washington television hams snarl and shout at each other on television. Ordinary people abuse politicians and their wives with shockingly coarse insults. Rudeness has become an acceptable way of announcing you are sick and tired of it all and are not going to take it anymore… The question is: why? Why has anger become the common response to the inevitable ups and down[s] of nation[al] life? The question is baffling not just because the American habit even in the worst of times has traditionally been mindless optimism, but also because there is so little for Americans to be angry about nowadays… So what explains the fury and dyspepsia? I suspect it’s the famous American ignorance of history. People who know nothing of even the most recent past are easily gulled by slick operators who prosper by exploiting the ignorant. Among these rascals are our politicians. Politicians flourish by sowing discontent. They triumph by churning discontent into anger. Press, television and radio also have a big financial stake in keeping the [country] boiling mad.

But it is difficult to look back now at 1995 and sense a deeply angry time. Not when the stock market routinely set record high closings. Not when Friends and Seinfeld were among the most popular primetime fare on network television. Not when the historical fantasy Braveheart won the Academy Award for best motion picture of 1995. Nineteen ninety-five was neither a frivolous nor a superficial time, but real anger in the land was to emerge later, in the impeachment of Bill Clinton in 1998, in the disputed election of George W. Bush in 2000, and in the controversies that followed the invasion of Iraq in 2003.

Nineteen ninety-five was not without controversy, of course, and some of the year’s most heated disputes were attached to the Million Man March, a huge and peaceful assembly on the National Mall in Washington, D.C., on October 16. The march was the inspiration of Louis Farrakhan, leader of the Nation of Islam, who envisioned the rally as a “holy day of atonement and reconciliation,” an opportunity for black men to “straighten their backs” and recommit themselves to their families and communities.

But Farrakhan’s toxic views and antisemitic rhetoric, as well as the exclusion of black women from the event, threatened to overshadow the march and its objectives. Neither the national NAACP nor the Urban League endorsed the march. For many participants, the dilemma was, as one journalist described it, “whether to march for a message they can believe in—unity—without marching to a drummer they may not follow.” (The Washington Post surveyed 1,047 participants and reported they generally were “younger, wealthier and better-educated than black Americans as a whole,” were inclined to view Farrakhan favorably, and expected him to raise his profile on the national scene.)

The twelve-hour rally itself was joyful, almost festive. Participants spoke of being awed by the turnout and sensed that they had joined a once-in-a-lifetime occurrence. “I can’t begin to explain the beautiful sight I saw when I arrived at the Mall that morning,” recalled André P. Tramble, an accountant from Ohio. “If critics expected the March to be chaotic and disruptive, their expectations were unfounded. I thought, if we could come together in this orderly way and establish some commonsense [principles] of unity, how easily we could solve half of our self-imposed problems.” Neil James Bullock, a mechanical engineer from Illinois, wrote afterward:

I was stunned to see masses of Black men. As far as I could see, there was a sea of Black men. I had never seen so many people in one place at one time, and I imagined I never would see such a sight again. There we were: all shapes, sizes, colors, and ages; fathers with sons, uncles, and brothers. It was truly a family affair… Fathers, sons, brothers, and uncles were all in Washington for one reason—atonement. The question was “atonement for what?” There were many things that one could say African American men need to atone for, not the least of which is the sin of disrespect. We have been disrespectful to our women, families, communities, and most of all to ourselves.

The crowd was addressed by a stream of speakers that included Jesse Jackson, Rosa Parks, Stevie Wonder, and Maya Angelou. Farrakhan spoke for nearly two hours. His oratory at times seemed to wander; he ruminated at one point about the number nineteen, saying: “What is so deep about this number 19? Why are we standing on the Capitol steps today? That number 19—when you have a nine you have a womb that is pregnant. And when you have a one standing by the nine, it means that there’s something secret that has to be unfolded.” He also pledged “to collect Democrats, Republicans and independents around an agenda that is in the best interest of our people. And then all of us can stand on that agenda and in 1996, whoever the standard bearer is for the Democratic, the Republican, or the independent party should one come into existence. They’ve got to speak to our agenda. We’re no longer going to vote for somebody just because they’re black. We tried that.”

It was a spectacular autumn day in the capital, and the turnout for the march was enormous. Just how large became the subject of bitter dispute. Drawing on aerial photographs and applying a multiplier of one person for every 3.6 square feet, the National Park Service estimated the march had attracted about 400,000 people. Farrakhan was outraged: he insisted the crowd reached 1.5 million to 2 million people, and he alleged racism and “white supremacy.” He threatened to sue the Park Service to force a revision of the crowd estimate. Within a few days, a third estimate was offered by a team of researchers at Boston University’s Center for Remote Sensing, who applied a multiplier of one person for every 1.8 square feet. They estimated the crowd size at more than 800,000.

The controversy gradually faded, and Congress soon took the Park Service out of the crowd-counting business, a decision received with more relief than disappointment. “No matter what we said or did, no one ever felt we gave a fair estimate,” said J.J. McLaughlin, the official charged with coordinating Park Service crowd estimates. “It got to the point where the numbers became the entire focus of the demonstration.”

Microsoft Begins to Surge

The year brought a burst of contrived giddiness and worldwide extravagance that would be unthinkable today, because all the hoopla was about the release of a computer operating system, Microsoft’s Windows 95. Microsoft reportedly spent $300 million to preface the launch with weeks of marketing hype. So successful was the campaign that, even among computer illiterates, Windows 95 became a topic of conversation.

The company spent millions for rights to the Rolling Stones hit “Start Me Up,” which became the anthem for the Windows 95 launch. It bought out the print run of the venerable Times of London on August 24, the day of the launch, and gave away the newspapers—fattened with advertising for Windows 95. A huge banner, stretching some thirty stories and proclaiming “Microsoft Windows 95,” was suspended from the landmark CN Tower in Toronto. In New York, the Empire State Building was illuminated in three of Microsoft’s four corporate colors—the first time a company’s colors had so bathed the Manhattan skyscraper.

FIGURE 3. Microsoft’s launch of its Windows 95 computer operating software in late August 1995 was preceded by weeks of hype and hoopla in the United States and abroad.

Not since “the first landing on the moon—or, at any rate, since the last Super Bowl—has America been more aflutter,” a U.S.-based correspondent for London’s Independent newspaper wrote about the Windows 95 fuss, his tongue decidedly in cheek. It was easy to lampoon the hype. After all, said the New York Times a few weeks before the launch, “It is not a lunar landing or the cure for disease. It is simply an improved version of a computer’s operating system—like a more efficient transmission for a car.” Barely heard above the din was Apple Computer’s rather cheeky counter-campaign, which pointed out that many features of Windows 95 were already available on Macintosh machines. “Been there, done that,” Apple proclaimed in its publicity. Even so, Apple in 1995 commanded only about 8 percent of the computer market.

The hoopla was as inescapable as Microsoft’s objective was obvious—to establish Windows 95 as the industry standard and encourage users to move from the older Windows 3 platforms and promptly embrace the new operating system. The big day finally came and, just after midnight on August 24 in Auckland, New Zealand, a nineteen-year-old student named Jonathan Prentice made the first purchase of Windows 95. As midnight approached in New York City, “a jostling scrum of technophiles” was reported outside a mid-Manhattan computer store, waiting to buy the software as it went on sale. But it was not as if consumers were strung out in blocks-long lines to buy the product. At some computer stores that opened at midnight on August 24, employees had the place pretty much to themselves thirty minutes or so after Windows 95 went on sale.

After the initial surge in late summer, sales of Windows 95 began to tail off. By December 1995, sales were about a third of what they had been in September. Many consumers, it turned out, chose not to buy and install the software themselves—an often-exasperating task—but waited to adopt it when it came bundled with their next computers. Even so, the launch of Windows 95 easily qualified as “the splashiest, most frenzied, most expensive introduction of a computer product” up to that time, as a New York Times writer put it. The manufactured extravagance was more than a little silly, but the product did turn out to be a memorable one. Nearly twenty years later, technology writer Walter Mossberg included Windows 95 as one of the dozen “most influential” technology products he had reviewed for the Wall Street Journal since the early 1990s.

Some of the launch-related giddiness can be laid to consumers’ embrace of the personal computer as a mainstay device in the workplace and at home. By 1995, a majority of Americans were using computers at home, at work, or at school, the Times Mirror Center for the People & the Press reported. The organization figured that eighteen million American homes in 1995 had computers equipped with modems, an increase of 64 percent from 1994. The popularity of the computer and the prevalence of modems helped ignite dramatic growth in Internet use in the years following 1995.

The hoopla over Windows 95 was also illustrative of the hyperbole afoot in 1995 about things digital. The hype spawned sweeping predictions, such as those by James K. Glassman, a syndicated columnist who wrote that computers “could displace schools, offices, newspapers, scheduled television and banks (though probably not dry cleaners). Government’s regulatory functions could weaken, or vanish… Even collecting taxes could become nearly impossible when all funds are transferred by electronic impulses that can be disguised.” Even more expansive was Nicholas Negroponte, founding director of MIT’s Media Lab and a prominent tech guru in 1995. Negroponte argued that the real danger was to be found in not enough hype—in understating the importance of the emergent digital world. “I think the Internet is one of the rare, if not unique, instances where ‘hype’ is accompanied by understatement, not overstatement,” he said shortly before publication of his best-selling book, Being Digital. The book was a provocative clarion call, an assertion that a profound and far-reaching transformation was under way. “Like a force of nature,” Negroponte wrote, “the digital age cannot be denied or stopped.”

His book, essentially a repurposing of columns written for Wired magazine, was not a flawless road map to the unfolding digital age; it was not a precision guide to what lay ahead. Negroponte, for example, had almost nothing to say about the Internet’s World Wide Web. Some of the book’s bold projections proved entertainingly wrong, or premature by years. Negroponte asserted that it was not at all far-fetched to expect “that twenty years from now you will be talking to a group of eight-inch-high holographic assistants walking across your desk,” serving as “interface agents” with computers. He suggested that by 2000 the unadventurous wristwatch would “migrate from a mere timepiece today to a mobile command-and-control center tomorrow… An all-in-one, wrist-mounted TV, computer, and telephone is no longer the exclusive province of Dick Tracy, Batman, or Captain Kirk.”

In other predictions, Being Digital was impressively clairvoyant: spot on, or nearly so. Negroponte anticipated “a talking navigational system” for automobiles. He discussed a “robot secretary that can fit in your pocket,” something of a foreshadowing of the Siri speech-recognition function of the contemporary iPhone. He rather seemed to foresee iPads, or smartphones with bendable screens, in ruminating about “an electronic newspaper” delivered to “a magical, paper-thin, flexible, waterproof, wireless, lightweight, bright display.” Video on demand, he wrote, would destroy the video-rental business: “I think videocassette rental stores will go out of business in less than ten years,” a prediction not extravagantly far off.

Negroponte notably anticipated a profound reshaping in the access to news, from one-way to interactive. Invoking “bits,” the smallest components of digital information, he wrote: “Being digital will change the nature of mass media from a process of pushing bits at people to one of allowing people (or their computers) to pull at them. This is a radical change.” He was hardly alone in foreseeing the digital challenge to traditional mass media. In February 1995, Editor & Publisher, the newspaper industry’s trade publication, had this to say in a special section devoted to “Interactive Newspapers”: “The Internet may be a tough lesson that some newspapers won’t survive. It is more than just another method of distribution. It represents an enormous opportunity in the field of communications that’s neither easy to describe nor easy to grasp.” The emergent online world, the article noted, “is truly the Wild West. Only this time there are a lot more Indians and they aren’t about to give up the land. They are dictating to us what they want to see in our online offerings.”

Such warnings were largely unheeded. No industry in 1995 was more ill-prepared for the digital age, or more inclined to pooh-pooh the disruptive potential of the Internet and World Wide Web, than the news business. It suffered from what might be called “innovation blindness”: an inability, or a disinclination, to anticipate and understand the consequences of new media technology. Leading figures in American journalism took comfort in the comparatively small audiences for online news in 1995. Among them was Gene Roberts, the managing editor of the New York Times who, during the 1980s, had become a near-legend in American journalism for transforming the Philadelphia Inquirer into one of the country’s finest daily newspapers. In remarks to the Overseas Press Club meeting in New York in January 1995, Roberts declared: “The number of people in any given community, in percentage terms, who are willing to get their journalism interactively is—blessedly, I say as a print person—very slim and likely to be for some time.” Roberts was right, but not for long. Just 4 percent of adult Americans went online for news at least once during the week in 1995. But that was the starting point: within five years, more than 20 percent of adult Americans would turn regularly to the Internet for news.

FIGURE 4. Internet adoption. Internet usage has surged in America since 1995, when just 14 percent of adults were online. By 2014, that figure had reached 85 percent. [Source: Pew Research Center.]

The Internet’s challenge to traditional media was not wholly ignored or scoffed at in 1995. In April, representatives of eight major U.S. newspaper companies announced the formation of the New Century Network. It was meant to be a robust, collaborative response to the challenges the Internet raised. As it turned out, New Century was emblematic of traditional news media’s confusion about the digital challenge. “In the spring of 1995,” the New York Times later observed, “a partnership of large newspaper companies formed the New Century Network to bring the country’s dailies into the age of the Internet. Then they sat down to figure out what that meant.”

New Century’s principal project was a Web-based news resource called NewsWorks, through which affiliated newspapers, large and small, would share content. But NewsWorks was seen as competitive with the online news ventures that member newspaper companies had set up on their own. In March 1998, New Century was abruptly dissolved, an abject failure that has been mostly forgotten.

Wildly popular among some mid-sized American newspapers in 1995 was a fad known as “public” or “civic” journalism. It was a quasi-activist phenomenon in which newspapers presumed they could rouse a lethargic or indifferent public and serve as a stimulus and focal point for rejuvenating civic culture. The wisdom of “public” journalism was vigorously debated by news organizations, but the disputes were largely beside the point. In a digital landscape, audiences could be both consumers and generators of news. They would not need news organizations to foster or promote civic engagement. Whatever its merits, “public” journalism suggested how far mainstream journalism had strayed from truly decisive questions in the field in 1995.

Smoking Proved a Killer, O.J. Simpson Proved Innocent

None of the missteps by American news organizations in 1995 was as humiliating as network television’s capitulation to the heavy-handed tactics of the tobacco industry. In August 1995, ABC News publicly apologized for reports broadcast on its primetime Day One news program in 1994 that tobacco companies Philip Morris and R.J. Reynolds routinely spiked cigarettes by injecting extra nicotine during their manufacturing. The companies sued for defamation; Philip Morris asked for $10 billion in damages, and R.J. Reynolds sought an unspecified amount.

To settle the case—which some analysts said the network stood a strong chance of winning—ABC agreed to the apology, which was read on its World News Tonight program and at halftime of an exhibition game on Monday Night Football. Philip Morris celebrated its victory by placing full-page advertisements in the New York Times, the Wall Street Journal, and the Washington Post. “Apology accepted,” the ads declared in large, bold type. The ads incorporated a facsimile of ABC’s written apology, which read in part: “We now agree that we should not have reported that Philip Morris adds significant amounts of nicotine from outside sources. That was a mistake.”

There were fears that ABC’s climb-down would exert a chilling effect on aggressive coverage of the tobacco industry—fears that seemed to be confirmed when CBS canceled plans in November 1995 to broadcast an explosive interview in which Jeffrey S. Wigand, a former tobacco industry executive, sharply criticized the industry’s practices. Wigand was the most senior tobacco industry executive to turn whistleblower. But the network’s lawyers feared CBS could be sued for billions of dollars for inducing Wigand to break a confidentiality agreement that barred disclosure of internal information about his former employer. No news organization had ever been found liable on such grounds. But the lawyers were adamant, and the interview with Wigand was pulled.

The interview was to have been shown on the newsmagazine program 60 Minutes, which prided itself on its aggressive and challenging reporting. Mike Wallace, the star journalist of 60 Minutes who had interviewed Wigand, said the ABC News settlement and apology had figured decisively in the CBS decision. “It has not chilled us as journalists, but it has chilled lawyers,” Wallace said, adding, “It has chilled management.” Wigand, formerly the vice president for research and development at Brown & Williamson Tobacco Corporation, said in the interview that his former employer included in pipe tobacco an additive suspected of causing cancer in laboratory animals. Wigand also accused the chief executive of Brown & Williamson of lying to Congress about the addictive nature of cigarettes.

Months later, after the Wall Street Journal published details of Wigand’s deposition in a lawsuit in Mississippi, CBS finally put the suppressed interview on the air. By then, its impact had been blunted, and CBS was subjected to withering criticism for its timidity. “It is a sad day for the First Amendment,” said Jane Kirtley, the executive director of the Reporters Committee for Freedom of the Press, “when journalists back off from a truthful story that the public needs to be told because of fears that they might be sued over the way they got the information.”

But nothing in 1995 invited more media self-flagellation than coverage of the double-murder trial in Los Angeles of O.J. Simpson, the former professional football star and popular television pitchman. The trial was the year’s biggest, most entrancing, yet most revolting ongoing event. Simpson stood accused of fatally stabbing his estranged wife, Nicole, and her friend, Ronald Goldman, in June 1994 outside her townhouse in the Brentwood section of Los Angeles. The trial’s opening statements were delivered in late January 1995, and the proceedings stretched until early October, when Simpson was found not guilty on both counts.

Coverage of the Simpson trial was unrelenting, often inescapable, and occasionally downright bizarre—as when television talk-show host Larry King paid a visit in January 1995 to the chambers of Lance Ito, the presiding judge. A reporter for the Philadelphia Inquirer recalled King’s visit this way:

After receiving a private audience with the judge in chambers, King bounded into court like an overheated Labrador retriever, drooling over the famous lawyers and waving to the audience as if he were the grand marshal at a parade… After yukking it up with the lawyers, he eagerly shook hands with the judge’s staff, the court reporters and everyone else in sight. Only when he approached Simpson did King learn that in Superior Court, even celebrity has limits. As King extended his hand with a hearty “Hey, O.J.!” two surly sheriff’s deputies stepped between them and firmly reminded the TV host that double-murder defendants aren’t allowed to press the flesh. King smiled and shrugged sheepishly. Simpson smiled and shrugged back.

Then, seeing no one left to shake hands with, the famous man turned to leave. Unfortunately, he picked the wrong door, walking toward the holding cell where Simpson is held before court. Deputies turned him around, pointing to the exit, where King paused to wave again before departing. “So long, everybody,” he said.

Howard Rosenberg, a media critic for the Los Angeles Times, was not exaggerating much when he wrote in the trial’s aftermath: “We in the media have met the circus, and we are it.”

Nor was humor columnist Dave Barry entirely kidding when he addressed the Simpson case in his year-end column, observing that the news media “were thrilled to have this great big, plump, juicy Thanksgiving turkey of a story, providing us with endless leftovers that we could whip up into new recipes to serve again and again, day after day, night after night… Of course you, the public, snorked these tasty tabloid dishes right down and looked around for more. Not that you would admit this. No, you all spent most of 1995 whining to everybody within earshot how sick and tired you were of the O.J. coverage.”

At times, the coverage seemed to scramble the hierarchy of American news media. On separate occasions in December 1994, for example, the New York Times quoted unsourced pretrial reports that appeared in the supermarket tabloid National Enquirer. The Times was taken to task for doing so. The tabloid press seemed most familiar and comfortable with the grim tawdriness of the Simpson saga, which was overlaid with wealth, celebrity, race, spousal abuse, courtroom posturing, and no small amount of bungling by police and prosecutors.

In the end, the case had little lasting influence on American jurisprudence. But it was, as James Willwerth of Time magazine put it, the “Godzilla of tabloid stories.” The National Enquirer assigned as many as twenty reporters to the story and sometimes offered up its most extravagant prose. For example, it said in describing the slaying of Nicole Simpson: “The night ended with the bubbly blonde beauty dead in a river of blood on her front doorstep—her throat slashed, her body bludgeoned, her face battered and bruised.” As the trial got under way, the Columbia Journalism Review observed that the biggest secret wasn’t Simpson’s guilt or innocence. The biggest secret was that “so many reporters were reading the National Enquirer religiously.”

When the trial ended on October 3, 1995, with announcement of the verdicts, many millions of Americans made sure to find a place in front of television sets or radio receivers—signaling how analog-oriented, how tethered to traditional media most Americans were in 1995. But even then, that dependency was undergoing gradual but profound alteration. Live coverage of the Simpson verdicts would be one of the last major national events in which the Internet was only a modest source for the news. As the passage of twenty years has made clear, the most important media story of 1995 was not the Simpson trial but the emergence into the mainstream of the Internet and the browser-enabled World Wide Web.

The highly touted and much-anticipated “information superhighway”—expected to be a complex environment of high-speed interactive television, electronic mail, home shopping, and movies on demand—fell definitively by the wayside in 1995, overtaken and supplanted by the Internet and the Web. “Nowadays, no one mentions the you-know-what without referring to it as the ‘so-called’ Information Superhighway,” the San Francisco Examiner noted at year’s end. “The buzz has clearly shifted to the Internet.”

The Web in 1995 was primitive by contemporary standards, but its immense potential was becoming evident. As we shall see in Chapter 1, the Internet’s exceptional vetting capacity—its ability to swiftly and effectively debunk half-baked claims and thinly sourced news reports—was dramatically displayed in 1995. Amorphous fears about Internet content likewise became prominent in 1995, giving rise to an ill-advised and ill-informed effort by Congress to inhibit speech online.

There also was a growing sense that the Web had much more to offer, and a three-day trade show in Boston called Internet World 95 provided a glimpse of what it soon might look like. The trade show took place in late October and attracted 32,000 visitors—nearly three times the turnout at a similar conference in 1994. It featured demonstrations of online audio news programming, live netcasts of sporting events, and long-distance telephone calls over the Internet. “All the ways of using the Internet today are so rudimentary in terms of what they’ll be like in a few years,” said Alan Meckler, chief executive of the trade show’s sponsoring company, MecklerMedia Corporation. Meckler said the trajectory of the Internet would be like moving “from a horse-drawn carriage to a supersonic jet.” It was a prescient observation, and in 1995 that trajectory brought the Internet into the mainstream.
